Critical Reflections on the 2018 World Development Report: If Learning is so Important then Why Can’t the World Bank Learn?

This post was prepared in response to the recent publication of the 2018 World Development Report, LEARNING to Realize Education’s Promise, by Hikaru Komatsu and Jeremy Rappleye of Kyoto University Graduate School of Education. Their recent publications on international learning assessments include “Did the Shift to Computer-Based Testing in PISA 2015 affect reading scores? A View from East Asia” (Compare, 2017) and “A PISA Paradox? An Alternative Theory of Learning as a Possible Solution for Variations in PISA Scores” (Comparative Education Review, 2017). They can both be reached at: rappleye.jeremy.6n@kyoto-u.ac.jp.

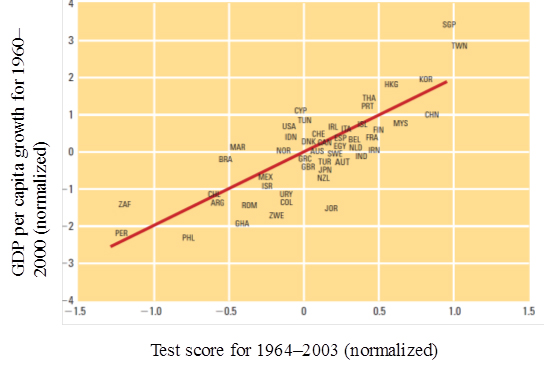

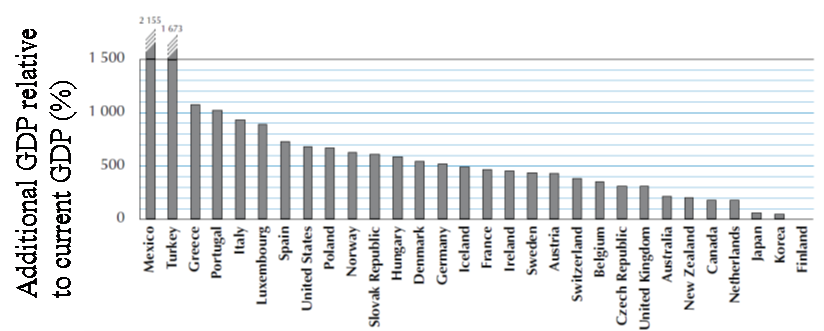

Improving PISA test scores guarantees future economic growth. This is a hypothesis widely propounded and elaborated by World Bank and OECD reports over the past two decades (Hanushek and Woessmann 2007, 2010, 2015). The empirical underpinning of this claim originates from the work of the US-based economist Eric Hanushek and his various colleagues over the years. Hanushek purportedly found that students’ test scores for 1964-2003 were strongly correlated with economic growth for 1960-2000 (Figure 1). Using this correlation, Hanushek and colleagues then projected future economic growth induced by reforms targeting improved PISA test scores for various countries (Figure 2). This future projection has been one major influence in convincing more and more countries to participate in the PISA exercise. PISA tests are believed to provide policymakers in participant countries a precise measure to understand the degree to which their countries are successfully raising the ‘knowledge capital’ of their domestic workforce and thus securing future economic growth.

Figure 1. Relationship between test scores for 1964-2003 and GDP per capita growth for 1960-2000. Test scores and economic growth were conditioned considering differences in GDP per capita among countries. Source: Hanushek and Woessmann 2007, p.7 with additions from authors.

Figure 2. Additional GDP until 2090 when the test score for a given country reaches the level of Finland. Source: Hanushek and Woessmann 2010, p.7 with additions from authors.

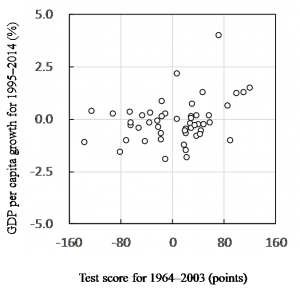

However, the analysis made by Hanushek and colleagues has been proven to be problematic, if not altogether false. Hanushek compared students’ cognitive skills for a given period (1964-2003) with economic growth for approximately the same period (1960-2000). Yet logically it takes at least a few decades for students to occupy a large portion of the workforce. Students’ test scores for a given period should thus be compared with economic growth in subsequent periods. Our previous study carried out this more appropriate comparison (Komatsu and Rappleye 2017), revealing that the relationship between test scores for a given period (1964-2003) and economic growth for a subsequent period (1995-2014) was virtually absent (Figure 3). We argued that it was thus unreasonable to conclude that improving PISA test scores guarantees higher future economic growth.

Figure 3. Relationship between test scores for 1964-2003 and GDP per capita growth for 1995-2014. Test scores and economic growth were conditioned considering differences in GDP per capita among countries. Source: Komatsu and Rappleye 2017, p.178.

We were pleased to find that the recently unveiled World Development Report, Learning to Realize Education’s Promise (World Bank 2018), followed our methodological lead: it compared test scores for a given period (1964-2003) with economic growth in a subsequent period (1970-2015). This stands in contrast with the previous report written by Hanushek and colleagues on behalf of the Bank (e.g., Hanushek and Woessmann 2007). The World Bank has apparently learned—although it is unclear if this is the result of our critique or some other input—that test scores for a given period should be compared with economic growth for a subsequent period.

Nevertheless, the World Bank’s learning has not gone nearly far enough. The first problem is that the economic growth period (1970-2015) that Bank analysts used in the 2018 World Development Report is still not appropriate. For example, the generation that took the first international test in 1964 was approximately age 21 in 1970. That is, the majority of the workforce at that stage still consisted of older generations. The World Bank should have used a later period, as we did. This is the primary reason why the World Bank still came up with a moderate correlation (Figure 4). This correlation was not as weak as ours (Komatsu and Rappleye 2017), but not as strong as that of Hanushek and Woessmann (2007).
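The cohort arithmetic behind this point can be sketched in a few lines of Python. This is only an illustration and not part of any of the analyses discussed here. The function name `cohort_age` and the test age of 15 are assumptions: the latter is simply the value implied by the statement that students tested in 1964 were approximately 21 in 1970.

```python
AGE_AT_TEST = 15  # assumption, implied by "approximately age 21 in 1970"


def cohort_age(test_year, year, age_at_test=AGE_AT_TEST):
    """Approximate age in `year` of students who were tested in `test_year`."""
    return age_at_test + (year - test_year)


# Start years of the economic growth windows mentioned in this post
growth_window_starts = {
    "Hanushek and Woessmann 2007": 1960,
    "World Bank 2018": 1970,
    "Komatsu and Rappleye 2017": 1995,
}

# Age of the 1964 test cohort at the start of each growth window
ages_1964_cohort = {
    study: cohort_age(1964, start)
    for study, start in growth_window_starts.items()
}

for study, age in ages_1964_cohort.items():
    print(f"{study}: 1964 cohort is about {age} at the start of the window")
```

Under this assumption, the 1964 cohort is about 11 at the start of the 1960 window, 21 at the start of the 1970 window and 46 at the start of the 1995 window, so only the last window begins when that cohort is well established in the workforce.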

Figure 4. Relationship between test scores for 1964-2003 and GDP per capita growth for 1970-2015. Test scores and economic growth were conditioned considering differences in GDP per capita among countries. Source: World Bank, 2018, p.46 with additions from authors.

The second problem – and much more disappointing by far – is that the World Bank has continued to use the correlation reported by Hanushek and colleagues to predict future economic growth for various countries, as shown in Figure 5 (World Bank 2018, p.47). Realizing the problem of the correlation analysis made by Hanushek, the World Bank had already (re)examined the correlation between test scores and economic growth. The World Bank could have easily revised the future projection accordingly using the results of the analysis. The fact that it did not implies that the World Bank has intentionally continued using the Hanushek correlation for projecting future growth, even though it has implicitly acknowledged that the Hanushek calculation is not accurate.

Figure 5. Additional GDP until 2090 when the PISA score for a given country is 400 points and when the score is 546 points. Source: World Bank, 2018, p.47 with additions from authors.

While utilising the Hanushek correlation indeed makes the official storyline of the entire report straightforward, it makes the careful reader feel that the statistics are being selected more for rhetorical purposes than for accuracy. It is disappointing that the World Bank has failed to learn not only from our findings (published in March 2017), but also from its own new findings. Should the World Development Report thus be viewed as a record of evidence learned? Or should it be viewed as a document of political persuasion underpinned by a failure to learn?

The Foreword of the Report, written by the World Bank President Jim Yong Kim, states that “[w]hat is important, and what generates a real return on investment, is learning and acquiring skills” (p.xi). He continues that “achieving learning… will require… assess[ment of] learning to make it a serious goal” (p.xii). If the World Bank really believes in this narrative, institutional learning at the Bank has a long way to go. We suggest that scholars need to continually ‘assess’ levels of World Bank learning to both prod the institution to continue learning and ensure that its so-called evidence is not used for political persuasion. It is crucial that the World Bank learns how to learn again: the stakes are increasingly high as OECD-World Bank collaborations on learning measures, such as PISA for Development, continue apace.

We emphasize that only when open communication and mutual learning among the World Bank, scholars, practitioners and other stakeholders occur continuously will we be able to “realize education’s promise” as the new Report invites us to do.

As a first step in that direction, we would welcome a dialogue on the points raised here and in our previous NORRAG blog with someone inside the World Bank, preferably with the authors of the World Development Report. We look forward to continued learning through that exchange.

References

Hanushek, E. A., and L. Woessmann. 2007. Education Quality and Economic Growth. Washington, DC: The World Bank.

Hanushek, E. A., and L. Woessmann. 2010. The High Cost of Low Educational Performance: The Long-Run Economic Impact of Improving PISA Outcomes. Paris: OECD.

Hanushek, E. A., and L. Woessmann. 2015. Universal Basic Skills: What Countries Stand to Gain. Paris: OECD.

Komatsu, H., and J. Rappleye. 2017. A New Global Policy Regime Founded on Invalid Statistics? Hanushek, Woessmann, PISA, and Economic Growth. Comparative Education 53 (2): 166-191.

World Bank. 2018. LEARNING to Realize Education’s Promise (World Development Report). Washington, DC: The World Bank.

Disclaimer: NORRAG’s blog offers a space for dialogue about issues, research and opinion on education and development. The views and factual claims made in NORRAG posts are the responsibility of their authors and are not necessarily representative of NORRAG’s opinion, policy or activities.
