How (Un)representative Are China's Stellar PISA Results?

This NORRAG Highlights is contributed by Rob J. Gruijters, University Lecturer at the Faculty of Education, University of Cambridge. In this post, the author argues that China’s PISA results are unrepresentative because they only take into consideration Beijing, Shanghai, Jiangsu and Zhejiang, four of the wealthiest provinces in the country. He suggests that allowing China to submit selective results defeats the purpose of the PISA examinations, and that the OECD should change this practice.

PISA has released its most recent results and, as in some earlier editions, China ranked first in all three subjects, scoring an astonishing 39 points higher than the number 2 in Science (Singapore), 22 points higher in Math, and 6 points higher in Reading. Observers were quick to point out, however, that the results for China are based on surveys in only four out of mainland China’s 31 provincial-level administrative units: Beijing, Shanghai, Jiangsu and Zhejiang (B-S-J-Z). As in previous years, the OECD was heavily criticized for allowing China to submit results that do not represent the whole country, unlike the other 78 countries participating in the assessment. It was noted that the four provinces in which pupils were tested contain China’s wealthiest metropolitan areas, which have the best schools. Moreover, it has been claimed that PISA surveys exclude children from China’s huge internal migrant population, an assertion denied by PISA.

Because the nuances of sample selection are often lost in media coverage of the results, critics argued that China was allowed to “cheat” on the assessment, resulting in a “huge propaganda victory”. Indeed, some Chinese newspapers used the results for nationalist purposes, although many others took a more balanced view. A second concern is that B-S-J-Z’s exceptional performance could result in unwarranted calls for other countries to imitate aspects of China’s education system, a phenomenon that can already be observed. Andreas Schleicher, Director for Education and Skills and Special Advisor on Education Policy to the Secretary-General at the OECD, seems indifferent to both of these concerns: in his view, the critics are simply sore losers.

Is B-S-J-Z representative of China?

There are good reasons to believe that Beijing, Shanghai, Jiangsu and Zhejiang are a poor reflection of the overall Chinese population: they are located in the prosperous Eastern part of the country, and are ranked among the five wealthiest provinces in the country. Moreover, there are well-known regional disparities in the quality of schools, with schools in wealthy urban areas receiving far more resources than their poor and rural counterparts. On the other hand, it has been noted that Chinese students tend to have high aspirations regardless of their economic and social circumstances, and some reports suggest that poor students study harder than their wealthier counterparts. Families from disadvantaged regions understand that education offers their best chance for social mobility, and correspondingly urge their children to perform well in school.

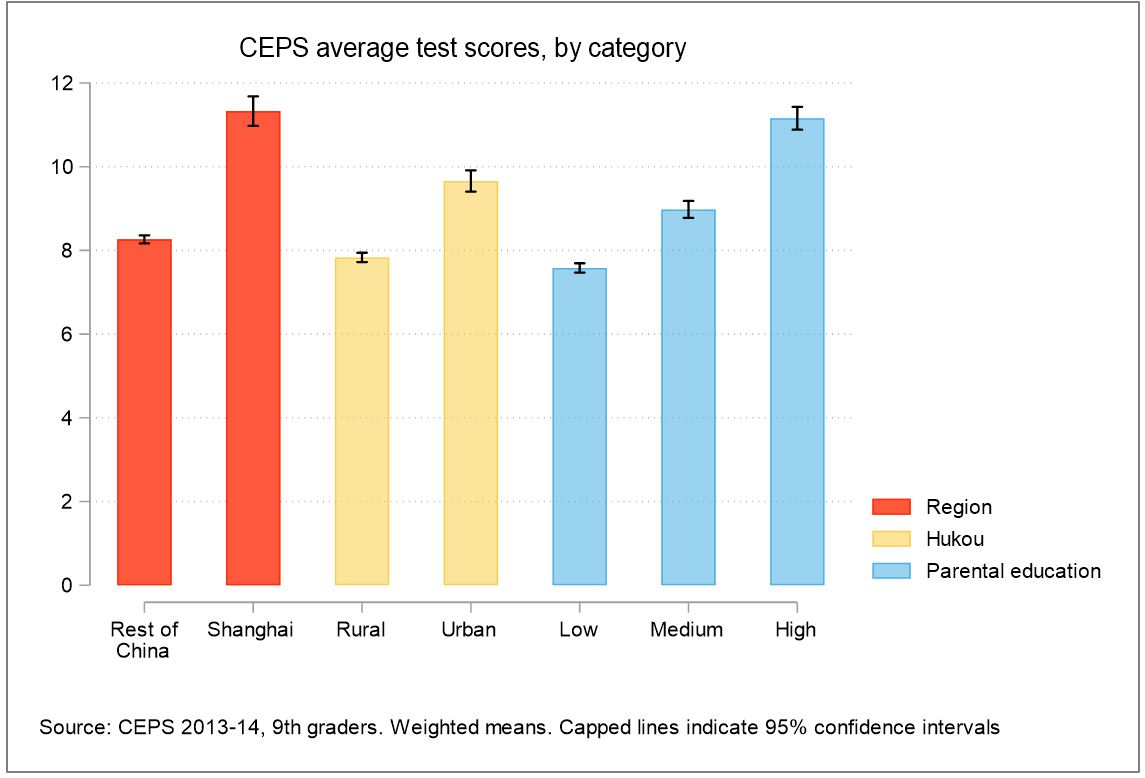

Because China does not conduct nationally representative PISA surveys, however, it remains unclear how (un)representative the B-S-J-Z data really are. Fortunately, there are other surveys that we can use to assess how learning outcomes differ between regions in China, especially the 2013-14 China Education Panel Survey (CEPS), which covers roughly the same age group as the PISA surveys (9th graders) and claims to be nationally representative. The only problem, however, is that the CEPS refuses to disclose province names in its publicly accessible data because it would be “unfair to those who rank at the bottom” and “will bring difficulties to subsequent CEPS surveys”, highlighting the political sensitivity of the issue. Because the CEPS contains an additional sample from Shanghai, however, we can at least compare the performance of Shanghai children to their peers in the rest of the country. In the figure below, I plot the average scores in the CEPS composite skills test (administered to 8,935 ninth-graders) for the Shanghai sample and the rest of China. For illustrative purposes, I compare this gap to differences by household registration (hukou) and parental education, two commonly used markers of inequality in the Chinese context.

Shanghai children score on average 3.06 points, or 0.75 standard deviations (SD), better than the rest of the country. Living in Shanghai therefore provides a similar advantage to children as having college-educated parents instead of primary-school educated parents. In PISA terms, a gap of 0.75 SD is substantial: it is equivalent to a difference of 67 points (taking the 2018 B-S-J-Z SD of 89 points as a reference), roughly the distance between B-S-J-Z and Estonia, the number 8 on the math ranking. Of course, Shanghai is only one of four provinces surveyed in PISA, and the CEPS test is not benchmarked to PISA or other international assessments, so the results are not strictly comparable. It is clear, however, that Shanghai is a positive outlier in terms of test scores in China, and the other three surveyed provinces probably are too.
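The conversion behind these figures can be reproduced in a few lines. The sketch below is only a back-of-the-envelope check using the numbers quoted above; the function names are illustrative, and it assumes (as the text does) that a gap measured in CEPS standard deviations can be loosely mapped onto the PISA scale, even though the two tests are not benchmarked against each other.

```python
def sd_gap_to_points(gap_in_sd: float, scale_sd: float) -> float:
    """Express a gap measured in standard deviations as points on a scale with the given SD."""
    return gap_in_sd * scale_sd


def implied_scale_sd(gap_in_points: float, gap_in_sd: float) -> float:
    """Recover the SD of a test from the same gap stated in points and in SD units."""
    return gap_in_points / gap_in_sd


# Figures quoted in the text.
CEPS_GAP_POINTS = 3.06   # Shanghai vs. rest of China, CEPS composite test
CEPS_GAP_SD = 0.75       # the same gap in standard deviations
PISA_BSJZ_SD = 89.0      # 2018 B-S-J-Z standard deviation used as the reference

pisa_equivalent = sd_gap_to_points(CEPS_GAP_SD, PISA_BSJZ_SD)
ceps_sd = implied_scale_sd(CEPS_GAP_POINTS, CEPS_GAP_SD)

print(f"Gap on the PISA scale: about {pisa_equivalent:.2f} points")
print(f"Implied SD of the CEPS test: about {ceps_sd:.2f} points")
```

The first value rounds to the 67 points cited above; the second simply shows what spread of CEPS scores is implied by a 3.06-point gap being worth 0.75 SD.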

What explains China’s improved results?

A second controversy relates to China’s almost unbelievable ascent in the PISA ranking between 2015 and 2018. In the 2015 PISA round, China participated as Beijing-Shanghai-Jiangsu-Guangdong (B-S-J-G), and was ranked 6th in Math, 27th in Reading and 10th in Science. In 2018, Guangdong was dropped in favour of Zhejiang, a wealthy coastal province close to Shanghai. No reason was provided for the change by either the OECD or the Chinese authorities. The new constellation obtained far higher scores than the previous one and was ranked first in all three subjects. It remains unclear whether the higher 2018 scores reflect a genuine increase in academic performance, or merely the replacement of Guangdong by Zhejiang. Chinese education experts have argued that Guangdong is more representative of China as a whole, containing wealthier as well as poorer areas. The table below shows that Guangdong is almost as large as the three other 2015 provinces combined, while Zhejiang is much smaller.

| Province | Inhabitants (millions) |
| --- | --- |
| Beijing (2015+2018) | 21.5 |
| Shanghai (2015+2018) | 24.2 |
| Jiangsu (2015+2018) | 80.1 |
| Zhejiang (2018 only) | 57.4 |
| Guangdong (2015 only) | 113.4 |

Source: China Statistical Yearbook 2019

Because of its population size, Guangdong would have had a major impact on the 2015 scores, and in its absence, the results from Beijing, Shanghai and Jiangsu carry relatively more weight. It is entirely plausible that this explains the abnormal increase between 2015 and 2018. In order to confirm this empirically, we could calculate how much the three provinces that took part in both rounds (B-S-J) improved between 2015 and 2018, and how they differed from Guangdong and Zhejiang respectively. Unlike the data for most other countries, however, the Chinese data do not allow province-level results to be calculated: the province indicators in the PISA dataset are replaced with ‘Undisclosed Stratum’. Unless the OECD releases this information, it will be impossible to tell whether ‘China’s’ improved performance was a statistical artefact.
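To give a rough sense of how much the composition changed, the sketch below computes each province's share of the pooled population in the two rounds, using the inhabitant figures from the table above. It rests on a loud assumption: total population is used as a stand-in for the weights PISA actually applies, which are based on sampled 15-year-old students and are not disclosed by province. The `pooled_mean` helper is hypothetical and only shows how province-level scores would combine if they were ever released; no actual scores are used here.

```python
# Inhabitants in millions, as listed in the table above.
POPULATION_MILLIONS = {
    "Beijing": 21.5,
    "Shanghai": 24.2,
    "Jiangsu": 80.1,
    "Zhejiang": 57.4,
    "Guangdong": 113.4,
}

SAMPLE_2015 = ("Beijing", "Shanghai", "Jiangsu", "Guangdong")
SAMPLE_2018 = ("Beijing", "Shanghai", "Jiangsu", "Zhejiang")


def population_shares(provinces):
    """Share of each province in the pooled population of the given sample.

    Assumption: total population approximates the (undisclosed) PISA weights.
    """
    total = sum(POPULATION_MILLIONS[p] for p in provinces)
    return {p: POPULATION_MILLIONS[p] / total for p in provinces}


def pooled_mean(scores, provinces):
    """Population-weighted mean of province-level scores (hypothetical inputs)."""
    shares = population_shares(provinces)
    return sum(shares[p] * scores[p] for p in provinces)


shares_2015 = population_shares(SAMPLE_2015)
shares_2018 = population_shares(SAMPLE_2018)

for label, shares in (("2015", shares_2015), ("2018", shares_2018)):
    print(label, {p: f"{s:.1%}" for p, s in shares.items()})
```

Under this assumption, Guangdong accounts for a little under half of the 2015 pool, while Zhejiang accounts for a little under a third of the 2018 pool, so Beijing, Shanghai and Jiangsu together move from roughly half to roughly two thirds of the total.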

Conclusion

The purpose of PISA is to provide standardized measures of educational performance that are comparable across countries as well as within countries over time. Allowing China to submit selective results, and then to change the criteria for selection between rounds, completely defeats this purpose. It is in the academic and the public interest to have reliable and internationally comparable data on educational outcomes in China, the largest country in the world. This can only be achieved if China submits nationally representative results including regional indicators, and the OECD should insist that it does so.

About the Author: Rob J. Gruijters is University Lecturer at the Faculty of Education, University of Cambridge
